Sunday's day off came with sunny skies and an incredible variety of sporting activities, including hiking, bike riding, sailing (with multiple capsizes and the rescue of Matteo, who had not properly set the rudder of the Laser sailboat), scuba diving, beach volleyball, tennis, and even an interesting round of the card game "Battle Line" after dinner.

This morning, after breakfast, we started off with an update from Giacomo about the COVID situation. There have been 2 more positive tests since the last update, plus 2 more people with apparent symptoms who are being tested. There are now 5 or more definite positives, who are isolating in their hotel rooms. Everyone is now masking and assuming they are positive. Everyone was reminded to fill out the daily poll that updates the COVID assessment. The response so far has been disappointing: only 36 people had filled it out by dinner yesterday, despite personal reminders during dinner.

Self intros

- Elisa Vianello - New technologies not just algorithms

- Sabina from Konstanz - Creativity is a precondition for learning

- Rodrigo Laje, Argentina - Paradoxical memory for paradoxical networks

- Ben Grewe - Bioplausible learning priors hierarchical symbolic pyramidal

- Arko Ghosh - Real world behavior hidden brain function

Memory and plasticity in technology

Melika introduced this session, the first one devoted to hardware and technologies.

Emre Neftci started off by pointing out that one of the hidden white boards shows that cortex tries to minimize plasticity from genetic development.

One of the most promising sets of rules is gradient descent (GD). GD is attractive because we can define a loss function whose gradient with respect to weights and inputs can be computed to assign credit for the error to specific connections in the network. This is done via the well-known chain rule, which splits the gradient into three factors.
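The three-factor structure can be written out as follows. This is a generic sketch, not the equation shown at the workshop: the symbols $L$ (loss), $y_i$ (output of neuron $i$), $u_i$ (its summed input), $w_{ij}$ (weight from input $j$ to neuron $i$), and $x_j$ (input $j$) are assumed notation.

```latex
\frac{\partial L}{\partial w_{ij}}
  = \underbrace{\frac{\partial L}{\partial y_i}}_{\text{error}}
    \cdot
    \underbrace{\frac{\partial y_i}{\partial u_i}}_{\text{neuron nonlinearity}}
    \cdot
    \underbrace{\frac{\partial u_i}{\partial w_{ij}}}_{\text{input}},
\qquad
u_i = \sum_j w_{ij}\, x_j
\;\Rightarrow\;
\frac{\partial u_i}{\partial w_{ij}} = x_j .
```

Under these assumptions, only the first factor depends on information from the rest of the network. The other two are local to the neuron and the synapse.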

This rule works really well and has been convincingly shown to train accurate SNNs in the past few years.

However there are some problems.

- Fading signals

- Global propagation

- Data hungry

- Data imbalance and non IID data

- May not work in RNNs

- Noise?

- It is very expensive and difficult to copy data around (in mixed signal HW especially)

- Needs high precision for small gradients

Emre concluded that these problems make it impossible to learn tabula rasa this way, nor does it make sense to do so when the base behavior can simply be printed onto the chip, as biology does through development. However, it does make a lot of sense to include such mechanisms to continually adapt a functional system.

Gregor Scholkopf pointed out examples from baby zebrafish, which can easily be shown to adapt to looming stimuli, to gratings that get the fish to swim, etc.; the fish can very flexibly control their threshold or feedback gain. Of course, such relatively simple adaptive control of gain or other system parameters has been explored since the 1970s in adaptive control of dynamical systems, but with small industrial impact because of the complexity of the mathematics and the difficulty of proving stability and robustness to disturbances.

Now Damien Querlioz took over and started off by positing that real systems would need a LOT of weights to change. Thus DRAM, the most widely used memory, which is very cheap and fast, is not ideal, because the energy of a DRAM access is high. CNN accelerators therefore reuse each memory access massively to amortize the cost. However, for learning or for SNNs (both of which are somewhat unpredictable) it becomes really hard to use DRAM efficiently.
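A small back-of-the-envelope sketch can make the amortization argument concrete. The energy constants below are illustrative order-of-magnitude assumptions, not figures quoted in the session, and `energy_per_mac_pj` is a hypothetical helper name.

```python
# Illustrative order-of-magnitude energies (assumptions, not numbers from the talk).
DRAM_READ_PJ = 640.0  # one 32-bit word fetched from off-chip DRAM
MAC_PJ = 4.0          # one 32-bit multiply-accumulate in logic


def energy_per_mac_pj(reuse: int) -> float:
    """Average energy per MAC when each fetched weight is used `reuse` times."""
    if reuse < 1:
        raise ValueError("reuse must be at least 1")
    return MAC_PJ + DRAM_READ_PJ / reuse


if __name__ == "__main__":
    for reuse in (1, 10, 100, 1000):
        print(f"reuse={reuse:5d}  energy per MAC = {energy_per_mac_pj(reuse):7.2f} pJ")
```

With no reuse the memory fetch dominates the cost of every operation. Workloads with irregular access patterns cannot count on the high reuse that makes the fetch cost negligible.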

What about flash memory? It seems very attractive, but flash is a very specialized process that is not compatible with normal CMOS and is not widely available, especially to academic R&D.

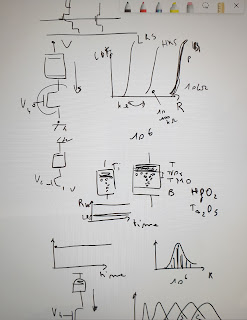

Elisa pointed out that commodity 3D VNAND flash exists but is not available at all except for mass storage, and that CMOS-compatible monolithic flash of moderate density is available at 28nm. She sketched this structure, showing the control gate (CG) and the floating gate (G).

Then Damien pointed out that the other problem is the high voltages that flash requires.

Then Damien described the RRAM and MRAM memristor technologies. RRAM allows the resistance between two terminals to be changed based on the history of the voltage across the terminals. The resistance R can be measured by using small voltages. There is already one smartwatch in mass production (MP) that uses MRAM. The advantages of such memories for embedded systems are that they are CMOS compatible, scale better, require lower voltages, and have much faster read times than flash.

The biggest problem is errors in massive numbers of devices. There will always be lots of devices that sit far out in the tails of the statistical distributions, but with binary memory these variations can be designed for by using error correcting codes (ECC), such that raw bit error rates of up to 1e-6 can be tolerated because the error rate at the system level is reduced to 1e-15.
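To get a feel for how ECC turns a raw error rate into a much smaller residual one, here is a minimal sketch. It assumes independent bit errors and a code that corrects up to `t_correctable` errors per codeword. The codeword lengths below are hypothetical, since the code and word size behind the 1e-6 and 1e-15 figures were not given. The function returns the probability that a codeword is uncorrectable, which is related to but not identical to a post-correction bit error rate.

```python
from math import comb


def block_failure_prob(n_bits: int, t_correctable: int, raw_ber: float) -> float:
    """Probability that a codeword of n_bits has more than t_correctable bit errors.

    Assumes each bit fails independently with probability raw_ber.
    """
    return sum(
        comb(n_bits, k) * raw_ber**k * (1.0 - raw_ber) ** (n_bits - k)
        for k in range(t_correctable + 1, n_bits + 1)
    )


if __name__ == "__main__":
    raw_ber = 1e-6
    # (codeword length, correctable errors): hypothetical examples only.
    for n_bits, t in ((64, 0), (72, 1), (78, 2), (85, 3)):
        p_fail = block_failure_prob(n_bits, t, raw_ber)
        print(f"n={n_bits:3d}  t={t}  P(uncorrectable word) = {p_fail:.3e}")
```

Each additional correctable error multiplies the residual failure probability by roughly the raw error rate times the word length, which is why a modest number of check bits buys many orders of magnitude.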

Such codes are "neuromorphic" in the sense that they are distributed codes that very effectively achieve high reliability at the system level. Such errors might be tolerated in DNNs, however.

ECC in cache memories for processors adds delay, which makes it hard to use ECC-protected nonvolatile memory for very high speed core memories.

Next Elisa Vianello took over and posited that these new types of nonvolatile memories will completely take over and change how we design future embedded systems.

Nowadays high densities are possible via crossbar architectures that can be layered. There are still many problems, however, with parasitic crosstalk from other devices that are not intended to be read. Parasitic metal resistance also changes the voltage along the lines. There are many such practical problems.

To solve this problem people put an "access" device, typically a MOS transistor, in series with the crossbar element.

However, this access device kills the density and is hard to make work with 3D structures. Even so, in 22nm FDSOI this memory is half the size of an SRAM cell, so it can be very attractive commercially; e.g. ST is in mass production with this approach for microcontrollers. The devices go through one "forming" step during production, which moves the bits in a memory from their initial very high resistance (R) to the low resistance state (LRS, 2-6 kOhm). Then they can be toggled to the high resistance state (HRS, 10s to 100s of kOhm).

The RRAM devices are not symmetric: the top electrode is different from the bottom one. They use titanium, hafnium, and tantalum oxides. The oxygen vacancies move to form filaments of low resistance. The filaments form along grain boundaries and so look a bit like lightning strikes.

These choices of material affect retention times. RRAM can now be produced that is stable at soldering temperatures of over 250 deg C. However, the programmed resistance values are distributed either normally (for small R) or log-normally (for large R). The low R states are quite uniform but the high R states are widely distributed.

The last point from Elisa concerned multibit storage via multiple resistance levels. The FET can be used to program various resistance values, and in principle these can be made distinguishable. The problem is the overlap between the distributions of adjacent levels.
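The overlap problem can be illustrated with a simple calculation. The sketch below assumes that every programmed level is Gaussian with the same spread and that a read threshold sits midway between adjacent levels. That is a simplification, since the high-resistance states were described as log-normal. The conductance window and spread are hypothetical values chosen only for illustration.

```python
from math import erfc, sqrt


def misread_prob(mean_low: float, mean_high: float, sigma: float) -> float:
    """Chance that a device at one level is read as the adjacent level.

    Assumes both levels are Gaussian with the same sigma and that the
    decision threshold lies midway between the two means.
    """
    half_gap = (mean_high - mean_low) / 2.0
    return 0.5 * erfc(half_gap / (sigma * sqrt(2.0)))


if __name__ == "__main__":
    g_min_us, g_max_us = 10.0, 100.0  # hypothetical conductance window (microsiemens)
    sigma_us = 3.0                    # hypothetical programming spread
    for n_levels in (2, 4, 8, 16):
        spacing = (g_max_us - g_min_us) / (n_levels - 1)
        p = misread_prob(0.0, spacing, sigma_us)
        print(f"{n_levels:2d} levels  spacing={spacing:6.2f} uS  misread prob = {p:.3e}")
```

Because the window is fixed, each extra bit per cell halves the spacing between levels, and the misread probability grows very quickly once the spacing approaches the programming spread.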

After the break, we continued with more aspects of memory and plasticity with focus on on-chip analog learning.

Emre started by pointing out that the session is about what such technologies are good at and not good at doing.

Elisa continued by recalling that we had started with a summary of how such technology can be used for digital symbol storage. Can these devices also be used for processing in memory (PIM), performing the multiply accumulate (MAC) operations?
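For readers unfamiliar with the idea, the MAC operation in a crossbar follows from Ohm's law and Kirchhoff's current law: each device contributes a current equal to its conductance times the row voltage, and the currents add along each column. The sketch below models an ideal crossbar only. It ignores the wire resistance and crosstalk problems described earlier, and the function name and example values are hypothetical.

```python
def crossbar_mac(conductances, voltages):
    """Column currents of an ideal crossbar: I[j] = sum_i G[i][j] * V[i].

    conductances: list of rows, each a list of device conductances in siemens.
    voltages: one input voltage per row, in volts.
    """
    if len(conductances) != len(voltages):
        raise ValueError("need one voltage per crossbar row")
    n_cols = len(conductances[0])
    return [
        sum(row[j] * v for row, v in zip(conductances, voltages))
        for j in range(n_cols)
    ]


if __name__ == "__main__":
    g = [[1e-4, 2e-4],
         [3e-4, 4e-4]]   # hypothetical programmed conductances (S)
    v = [0.1, 0.2]       # hypothetical read voltages (V)
    print(crossbar_mac(g, v))  # column currents in amperes
```

The whole matrix-vector product is obtained in one read step, which is the attraction of PIM. The accuracy of the result depends directly on how well the conductances can be programmed.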

Yigit from INI has been working on this aim.

He wrote an ODE to describe the continuous dynamics of a synapse with eligibility trace eij. eij is a lowpass filtered variable that is used to update the weight via some other rule. eij decays to zero and is also driven by the product of some rate n, f(xj), and g(xj). f and g depend on the particular learning rule and on the pre- and postsynaptic activity. The decay time scale is on the order of tens of ms to s.
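A minimal numerical sketch of such a trace is given below. It assumes the form de/dt = -e/tau + eta * f(x_pre) * g(x_post), which is one common way to write a leaky eligibility trace and is not necessarily the exact equation on the board. The identity functions used for f and g, the parameter values, and the assignment of one function to presynaptic and the other to postsynaptic activity are all assumptions.

```python
def simulate_trace(pre, post, dt=1e-3, tau=0.1, eta=1.0,
                   f=lambda x: x, g=lambda x: x):
    """Forward-Euler integration of de/dt = -e/tau + eta * f(pre) * g(post).

    pre, post: sequences of pre- and postsynaptic activity, one value per step.
    Returns the eligibility trace after each time step.
    """
    e = 0.0
    trace = []
    for x_pre, x_post in zip(pre, post):
        e += dt * (-e / tau + eta * f(x_pre) * g(x_post))
        trace.append(e)
    return trace


if __name__ == "__main__":
    # 50 ms of coincident activity followed by 950 ms of silence.
    pre = [1.0] * 50 + [0.0] * 950
    post = list(pre)
    trace = simulate_trace(pre, post)
    print(f"trace at end of activity: {trace[49]:.4f}")
    print(f"trace after 1 s:          {trace[-1]:.2e}")
```

The trace builds up while pre- and postsynaptic activity coincide and then decays with time constant `tau`, so a later signal can still find a record of which synapses were recently active.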

The result of this synaptic weight change is that G (the conductance) is increased quickly, then decays back to some steady state modified value.

Now Emre turned to Damien to bring up yet another scheme for analog learning. He again drew the plot of the distribution of programmed conductances (similar to Elisa's), which shows the big variability of the programmed conductance values.

Metropolis-Hastings Markov chain Monte Carlo (MCMC) is an algorithm that samples the weights over and over again to obtain a collection of models. The approach is not so good for big data but is very good for small networks that must operate with huge programming variability.

In practice they used MCMC with 16k weights to recognize mammogram patterns better than the device simulation predicted. Now there is a lot of effort in this area.

By running many chains in parallel it is possible to accelerate at least the concurrent part of MCMC. The approach is not intended for mass production programming but is aimed at in-the-field programming.
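For readers who have not met Metropolis-Hastings, the following software-only sketch shows the basic loop on a toy problem. It is not the hardware scheme described in the session: the two-weight logistic model, the Gaussian prior, the random-walk proposal, and the four-point dataset are all assumptions chosen to keep the example small.

```python
import math
import random


def log_posterior(w, data, prior_sigma=2.0):
    """Log of Gaussian prior times Bernoulli likelihood for a logistic model."""
    lp = -sum(wi * wi for wi in w) / (2.0 * prior_sigma**2)
    for x, y in data:
        z = sum(wi * xi for wi, xi in zip(w, x))
        s = z if y == 1 else -z
        # log(sigmoid(s)), with a linear approximation for very negative s
        lp += -math.log1p(math.exp(-s)) if s > -30.0 else s
    return lp


def metropolis_hastings(data, n_samples=2000, step=0.5, seed=0):
    """Random-walk Metropolis-Hastings over the weight vector."""
    rng = random.Random(seed)
    w = [0.0, 0.0]
    lp = log_posterior(w, data)
    samples = []
    for _ in range(n_samples):
        proposal = [wi + rng.gauss(0.0, step) for wi in w]
        lp_new = log_posterior(proposal, data)
        # Symmetric proposal: accept with probability min(1, p_new / p_old).
        if rng.random() < math.exp(min(0.0, lp_new - lp)):
            w, lp = proposal, lp_new
        samples.append(list(w))
    return samples


def predict(samples, x):
    """Average the predictions of all sampled models (the 'collection')."""
    total = 0.0
    for w in samples:
        z = sum(wi * xi for wi, xi in zip(w, x))
        total += 1.0 / (1.0 + math.exp(-z))
    return total / len(samples)


if __name__ == "__main__":
    # Toy data: (feature, bias input) -> label
    data = [((-2.0, 1.0), 0), ((-1.0, 1.0), 0), ((1.0, 1.0), 1), ((2.0, 1.0), 1)]
    samples = metropolis_hastings(data)[500:]  # discard burn-in
    print("P(y=1 | x=2) is about", round(predict(samples, (2.0, 1.0)), 3))
```

The output of the procedure is a set of weight vectors rather than a single one, and predictions are averaged over that set. Independent chains started from different seeds can be run side by side, which is the part that parallelizes.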

Emre took over again and brought up the inclusion of "inductive biases" (i.e. assumed priors from the problem domain) to reduce the training effort. One of these is the inclusion of expected connectivity patterns.

Melika Payvand took over then and described their work with ST to make such systems more practical through the inclusion of inductive biases. She pointed out that connectivity patterns tend to be local, so the weight matrix is densest along the diagonal with sparser connections off the diagonal, an observation that was perhaps at least partially inspired by past observations of such small-world connectivity patterns from Henry Kennedy and others at past workshops.

The single crossbar array is broken into smaller arrays with special routing cells that can make longer-range connections. Such a network on chip (NOC) is used in many existing chips, including all the larger neuromorphic systems such as TrueNorth and SpiNNaker, and many other commercial digital massively parallel chips. Many mainstream AI hardware accelerators also use such routing fabrics to route data from memory to processing elements (PEs) and between PEs. This project, however, is among the first to try such a NOC for memristive crossbar PIM.
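The saving from tiling can be sketched with a toy connectivity model. The code below is only an illustration of the argument: the connection probabilities, the tile size, and the function names are hypothetical, and the real chip's routing cells are not modeled beyond counting the connections that would have to be routed between tiles.

```python
import random


def small_world_mask(n_neurons, tile, p_local, p_far, seed=0):
    """Random connectivity that is dense within a tile and sparse across tiles."""
    rng = random.Random(seed)
    return [
        [rng.random() < (p_local if i // tile == j // tile else p_far)
         for j in range(n_neurons)]
        for i in range(n_neurons)
    ]


def tile_summary(mask, tile):
    """Count local and routed synapses and compare crossbar device counts."""
    n = len(mask)
    if n % tile != 0:
        raise ValueError("number of neurons must be a multiple of the tile size")
    local = sum(mask[i][j] for i in range(n) for j in range(n)
                if i // tile == j // tile)
    routed = sum(mask[i][j] for i in range(n) for j in range(n)
                 if i // tile != j // tile)
    return {
        "local_synapses": local,
        "routed_synapses": routed,
        "devices_monolithic": n * n,                  # one big crossbar
        "devices_tiled": (n // tile) * tile * tile,   # diagonal tiles only
    }


if __name__ == "__main__":
    mask = small_world_mask(n_neurons=256, tile=32, p_local=0.5, p_far=0.01)
    for key, value in tile_summary(mask, tile=32).items():
        print(f"{key:20s} {value}")
```

When most connections fall inside the diagonal blocks, a single large crossbar spends most of its devices on connections that do not exist, while small tiles plus a routing fabric cover the same network with far fewer devices.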

Next Fabien XXX took over. He described an iterative programming process that assumes a feedforward architecture that can compensate for programming variability.

He then described some "molecular electronics" by playing a little video of the growth of processes between electrodes in a 2D preparation. In terms of scale, the processes are about 20um wide and grow over a few minutes.

Next Rodney Douglas took over to describe some results from his long interest in and work on the development of self-constructing networks, with Gaby Michels, Stan Kerstjens, and others who have previously participated in the workshop, including a collaboration for cortical genetic expression data from the lab of Henry Kennedy in France.

The thing that he finds very compelling is that these biological neural networks grow and connect themselves with just a handful of basic instructions like moving and turning.

A key observation from von Neumann is that the instructions must be either 1D or 2D, not 3D. The resulting self-constructing objects must have a 1D or 2D structure to store the replication instructions.

- The concept is that there is a machine instruction, but the instructions themselves must be reconstructed.

- Currently brain research focuses on understanding the constructed brain, not the instructions that construct it in the first place.

Kolmogorov and Greg Chaitin complexity are used to quantify complexity and information.

Paul asked what the actual number of bits used only for the nervous system is; of course most of the DNA is used for all body cells, so it is hard to split out the brain part.

One system they looked at is cortex. The precursor cells form a sheet from the progenitor neuron and start to migrate upwards to form the cortical layers: layer 6, layer 5, layer 4, etc. The sheet has all types of cells. They migrate and grow axons according to a small number of rules that are plausibly encoded in genetic programs, and the group has built a detailed model of the complete self-construction of a 6-layered cortical sheet.

The other project (with Stan Kerstjens) deals with how the brain establishes its connection matrix. Given a few cells at the top, is there a way to get them to wire up to other cells in a different place? The key observation is that it is possible to encode a symbolic "address space", a genetic address space encoding, that enables wiring over a large range of scales.

Emre then concluded the session by reiterating that learning devices can be engineered by using

- Structural plasticity

- Evolutionary approaches

The problem is that each time data is presented, all approaches take a different path through weight space depending on the samples and their order. The point is that there are techniques that can learn effectively with a small number of bits of precision by using meta-learning techniques.

This interesting morning of technology brought us to lunch against a backdrop of an immense cruise ship that had anchored this morning next to Alghero and increasing worry about a possible COVID outbreak that would force cancellation of the rest of the workshop. Participants gathered mainly in the open air porch outside the hotel bar to continue working on their projects.
